Predicting Grades Early: A New Tool for Student Success at Leiden University

At Leiden University, we’re always looking for ways to help students shine, especially in their first year when the transition to university life can be tough. In our latest project, we’ve tackled a big question: can we predict final grades early enough to help students before they fall behind? Focusing on the Academic Literacy course in the International Studies programme, we’ve built a data-driven tool that uses online activity to forecast end-of-semester grades week by week. Here’s how it works, what we found, and why it’s a game-changer for supporting students.

Image credits to Deng Xiang.

The Goal: Testing Predictions in Practice

Can simple Brightspace data, such as logins, video views, and quizzes, predict grades well enough for real-world teaching? Research has long shown that this is possible, but our pilot puts it to the test in everyday classrooms. We trained the tool on data from 469 students in the 2023 Academic Literacy cohort, then tested it on data from 458 students in the 2024 cohort. For privacy, all data was anonymised, and students could opt out of data processing by email. This pilot is intended as a starting point to spark discussion among educators about practical uses and about ethical concerns around data privacy.

Diving into the Data

We began with Brightspace logs, which capture every click, view, and submission. We then turned these raw counts into features that indicate how much effort students are putting in, including:

- Progress Tracking: How many logins, quizzes, or videos has a student completed, and how much of their total activity does each of these make up?

- Weekly Changes: Are they stepping up or slowing down from last week?

- Consistency: Do they keep a steady routine over a month?

- Class Comparison: How does their effort compare to the class average?

- Momentum: Are their activity levels trending up or down over time?
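To make these feature families concrete, here is a minimal sketch of how they could be computed from weekly activity counts. It is an illustration only, not our production code: the function names, the three activity types, the four-week window, and the toy numbers are all assumptions made for this example.

```python
from statistics import mean, pstdev

# Hypothetical activity types; the real Brightspace export has many more.
ACTIVITY_TYPES = ("logins", "quizzes", "videos")


def slope(values):
    """Least-squares slope of values against week index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def engagement_features(weeks, class_avg_total):
    """Build features for one student.

    weeks: list of per-week count dicts, oldest first (at least one week).
    class_avg_total: class-average total activity in the latest week.
    """
    totals = [sum(week[t] for t in ACTIVITY_TYPES) for week in weeks]
    overall = sum(totals)
    features = {}
    # Progress tracking: cumulative count and share of total activity.
    for t in ACTIVITY_TYPES:
        count = sum(week[t] for week in weeks)
        features[f"{t}_count"] = count
        features[f"{t}_share"] = count / overall if overall else 0.0
    # Weekly change: latest week minus the week before.
    features["weekly_change"] = totals[-1] - totals[-2] if len(totals) > 1 else 0
    # Consistency: spread of activity over the last four weeks (lower is steadier).
    features["consistency"] = pstdev(totals[-4:])
    # Class comparison: distance from the class average this week.
    features["vs_class"] = totals[-1] - class_avg_total
    # Momentum: overall trend in weekly activity.
    features["momentum"] = slope(totals)
    return features


# Toy data for one student over three weeks (made up for illustration).
weeks = [
    {"logins": 4, "quizzes": 1, "videos": 1},
    {"logins": 5, "quizzes": 2, "videos": 1},
    {"logins": 6, "quizzes": 2, "videos": 2},
]
features = engagement_features(weeks, class_avg_total=9.0)
```

In this toy case the student's activity grows by two actions per week, so both `weekly_change` and `momentum` come out positive, and `vs_class` shows them slightly above the assumed class average.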

Building a Smart Predictor

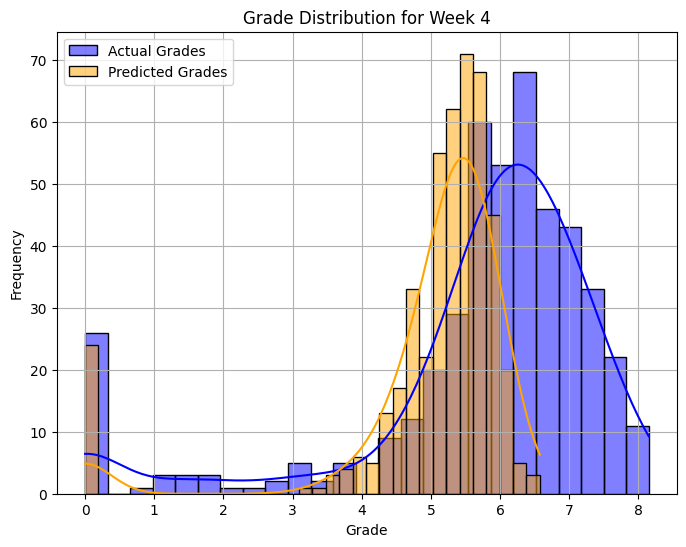

Our tool uses a two-stage model to predict grades. First, it identifies students at risk of a zero (like dropouts or no-shows) by finding patterns in a complex mix of online activities, correctly spotting 95% of these cases. Then, for students engaging with the course, it predicts their final grade based on many combined signals such as quiz and video interactions. Compared with a simple baseline that assigns last year’s cohort average grade to every student in the new cohort, our model is more accurate throughout the semester and especially mid-semester, predicting the final grade to within one grade point for 62% of students. As expected, early weeks are less precise due to limited data, but this foundation can be improved with further tuning.
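The two-stage idea and the "within one grade point" comparison can be sketched in a few lines. This is a simplified illustration rather than our actual model: the stand-in functions `toy_zero_risk` and `toy_grade_model`, the 0.5 risk threshold, the 0 to 10 grade scale, and all the numbers are assumptions invented for the example.

```python
from statistics import mean
from typing import Callable, Sequence

Features = dict[str, float]


def two_stage_predict(
    features: Features,
    zero_risk: Callable[[Features], float],
    grade_model: Callable[[Features], float],
    risk_threshold: float = 0.5,  # assumed cut-off, not from the pilot
) -> float:
    """Stage 1: predict a zero if the risk is high. Stage 2: otherwise estimate the grade."""
    if zero_risk(features) >= risk_threshold:
        return 0.0
    return grade_model(features)


def within_one_point(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Share of students whose predicted grade is within one point of the actual grade."""
    hits = sum(abs(p - a) <= 1.0 for p, a in zip(predicted, actual))
    return hits / len(actual)


# Hypothetical stand-ins for the two trained models.
def toy_zero_risk(f: Features) -> float:
    return 1.0 if f["logins"] == 0 else 0.0


def toy_grade_model(f: Features) -> float:
    return min(10.0, 4.0 + 0.5 * f["quizzes"])  # assumes a 0 to 10 scale


# Made-up students from the "new" cohort and their actual final grades.
students = [
    {"logins": 0, "quizzes": 0},
    {"logins": 12, "quizzes": 6},
    {"logins": 5, "quizzes": 2},
    {"logins": 20, "quizzes": 10},
]
actual = [0.0, 7.5, 5.5, 8.0]

model_preds = [two_stage_predict(s, toy_zero_risk, toy_grade_model) for s in students]

# Baseline: give everyone the average grade of the previous cohort.
previous_cohort_grades = [6.0, 7.0, 0.0, 7.0]
baseline_preds = [mean(previous_cohort_grades)] * len(students)

model_score = within_one_point(model_preds, actual)
baseline_score = within_one_point(baseline_preds, actual)
```

Splitting the problem this way keeps the zeros, which behave very differently from ordinary grades, from distorting the grade estimate for students who are actively engaging.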

What We Learned

Mid-semester, the tool really shines. It flags at-risk students (those trending toward a zero) accurately enough for advisors to step in with simple supports, such as check-in emails or study tips. It also emphasises patterns of success: consistent quiz-takers and video-watchers often score higher than their less consistent counterparts.

The good news is that predictions are stable for about two-thirds of students, meaning that advisors can rely on the trends for the most part. The predictions are not, however, flawless. Early on, the tool might alert too often, and later, it sometimes underestimates who will pull through. These limitations show that although the tool has considerable potential to support educators, it should not replace their judgement.

Image credits to Arian Kiandoost.

Why It Matters

This pilot is a starting point, and we can improve it with better data, better methods, or additional factors such as previous grades and performance. More importantly, it opens a conversation: how can educators use tools like this? Imagine weekly alerts boosting pass rates by tailoring support. We’re also tackling ethics: ensuring GDPR compliance, student consent, and unbiased alerts through collaboration with Leiden University’s data protection team. This balances data’s power with human care, making education adaptive and equitable.

Challenges and Next Steps

The pilot already shows great promise, but we knew it wouldn’t be perfect. Since we’ve only tested our tool on one course, we’re seeking trials in other courses across faculties with similar setups (such as optional tasks and robust Brightspace tracking). Going forwards, such trials are key, as is our continued commitment to data privacy, compliance, and transparency. Moreover, we’re excited to build advisor dashboards for weekly alerts. Picture a simple email: “Student X’s grade is trending low. Suggest a check-in?” We’re also exploring ways to track long-term impact, to establish whether early help boosts overall success.
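As a rough idea of what such a weekly alert could look like behind the scenes, here is a small sketch. Nothing here is built yet: the function name `draft_alerts`, the 5.5 threshold, the rule "below the threshold and lower than last week", and the pseudonymous student IDs are all assumptions for illustration.

```python
def draft_alerts(weekly_predictions, threshold=5.5):
    """Draft one alert line per student whose latest predicted grade is
    below the threshold and lower than the previous week's prediction."""
    alerts = []
    for student_id, preds in weekly_predictions.items():
        if len(preds) < 2:
            continue  # not enough history to call it a trend
        previous, latest = preds[-2], preds[-1]
        if latest < threshold and latest < previous:
            alerts.append(
                f"{student_id}'s grade is trending low "
                f"({previous:.1f} -> {latest:.1f}). Suggest a check-in?"
            )
    return alerts


# Made-up weekly predicted grades, keyed by pseudonymous ID.
predictions = {
    "student_017": [6.2, 5.8, 5.1],  # falling and below the threshold
    "student_042": [7.0, 7.4, 7.3],  # comfortably above the threshold
    "student_108": [4.9, 5.2, 5.4],  # low but improving
}
alerts = draft_alerts(predictions)
```

Requiring both a low and a falling prediction is one possible way to reduce over-alerting; the right rule would need to be agreed with advisors.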

The Bigger Picture

This project is a step toward a more responsive university experience. By blending data with educator expertise, we can catch struggles early, tailor support, and help more students thrive. It’s not about replacing teachers but giving them a superpower: foresight. As we refine this tool, we invite educators to join us in shaping a future where every student gets the chance to succeed.

This project is part of the broader theme of learning analytics and data science. If you would like to learn more about this topic, have a look at our webpage!